Model Overview

Advanced facial expression analysis system that uses the Facial Action Coding System (FACS) to detect and measure emotional states and stress levels in real time.

Key Features

- Real-time FACS analysis

- Multi-AU detection and tracking

- Stress & anxiety assessment

- Visual analysis output

- Action Unit intensity measurement

- Temporal pattern analysis

- Clinical-grade accuracy

- Cross-cultural validation

- Micro-expression detection

- Automated reporting

- Privacy-preserving processing

Performance Metrics

Response Time

Accuracy

AU Detection

Emotion Detection

Supported Emotions

Input Operational

Video Processing Limitations

- Requires clear video input

Minimum 720p resolution, 30fps, good lighting conditions

- Face visibility requirements

Face must be clearly visible and within 45° angle from front
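
The input requirements above can be checked before a stream is handed to the analyzer. The following is a minimal sketch, not part of the SDK: `FrameInfo` and `check_input` are hypothetical names, the head-rotation estimate is assumed to come from a separate face-pose step, and lighting quality is not checked here.

```python
from dataclasses import dataclass


@dataclass
class FrameInfo:
    """Hypothetical container for stream metadata (not an SDK type)."""
    width: int
    height: int
    fps: float
    yaw_degrees: float  # head rotation away from frontal, estimated elsewhere


# Thresholds taken from the requirements listed above.
MIN_HEIGHT = 720          # "720p" is interpreted as a frame height of 720 pixels
MIN_FPS = 30.0
MAX_YAW_DEGREES = 45.0


def check_input(info: FrameInfo) -> list[str]:
    """Return a list of problems; an empty list means the input passes."""
    problems = []
    if info.height < MIN_HEIGHT:
        problems.append(f"resolution {info.width}x{info.height} is below 720p")
    if info.fps < MIN_FPS:
        problems.append(f"frame rate {info.fps:g} fps is below 30 fps")
    if abs(info.yaw_degrees) > MAX_YAW_DEGREES:
        problems.append(
            f"face angle {abs(info.yaw_degrees):g} degrees exceeds 45 degrees"
        )
    return problems
```

Returning a list of problems rather than a single boolean lets a caller report every failed requirement at once.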

System Monitoring Requirements

- Best for continuous monitoring

Optimal analysis period: 30+ minutes for baseline establishment
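
One way to picture baseline establishment is a running mean and variance that only starts reporting deviations once 30 minutes of data have been seen. This is an illustrative sketch under that assumption; the system's actual baseline method is not described here, and `RunningBaseline` is a hypothetical helper, not an SDK class.

```python
import math

BASELINE_SECONDS = 30 * 60  # 30-minute minimum from the requirement above


class RunningBaseline:
    """Welford running mean/variance for one scalar signal (e.g. a stress score)."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0        # running sum of squared deviations from the mean
        self.first_ts = None  # timestamp (seconds) of the first sample
        self.last_ts = None   # timestamp (seconds) of the latest sample

    def update(self, timestamp, value):
        """Fold one timestamped sample into the baseline."""
        if self.first_ts is None:
            self.first_ts = timestamp
        self.last_ts = timestamp
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    @property
    def ready(self):
        """True once the samples span at least the baseline period."""
        if self.first_ts is None:
            return False
        return self.last_ts - self.first_ts >= BASELINE_SECONDS

    def z_score(self, value):
        """Deviation from baseline in standard deviations, or None if not ready."""
        if not self.ready or self.count < 2:
            return None
        std = math.sqrt(self._m2 / (self.count - 1))
        if std == 0:
            return 0.0
        return (value - self.mean) / std
```

Until `ready` becomes true, `z_score` returns `None`, so downstream code cannot mistake an unestablished baseline for a normal reading.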

Clinical Usage Limitations

- Not yet validated for medical diagnosis (clinical trials in progress)

Clinical trials completion expected Q1 2025

API Implementation Guide

Integration example using our Python SDK:

    from dyagnosys import FacsAnalyzer

    def analyze_expression(video_stream):
        analyzer = FacsAnalyzer()

        # Initialize real-time analysis
        analyzer.start_stream(video_stream)

        # Configure detection parameters
        analyzer.set_detection_threshold(0.85)
        analyzer.enable_temporal_smoothing(True)

        # Get real-time results: this generator yields one emotion reading per iteration
        while True:
            aus = analyzer.get_current_aus()
            emotions = analyzer.interpret_emotions(aus)
            yield emotions

Video Analysis

FACS-Based Emotion Recognition

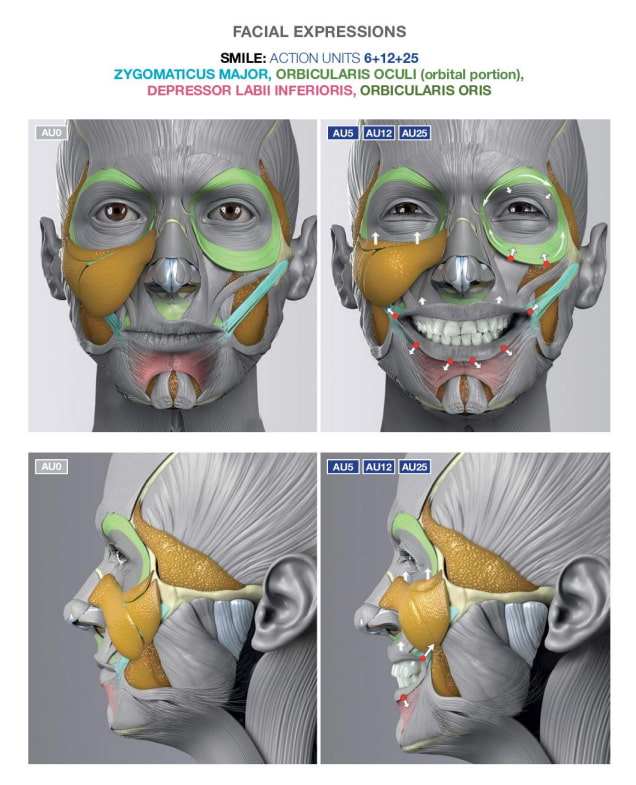

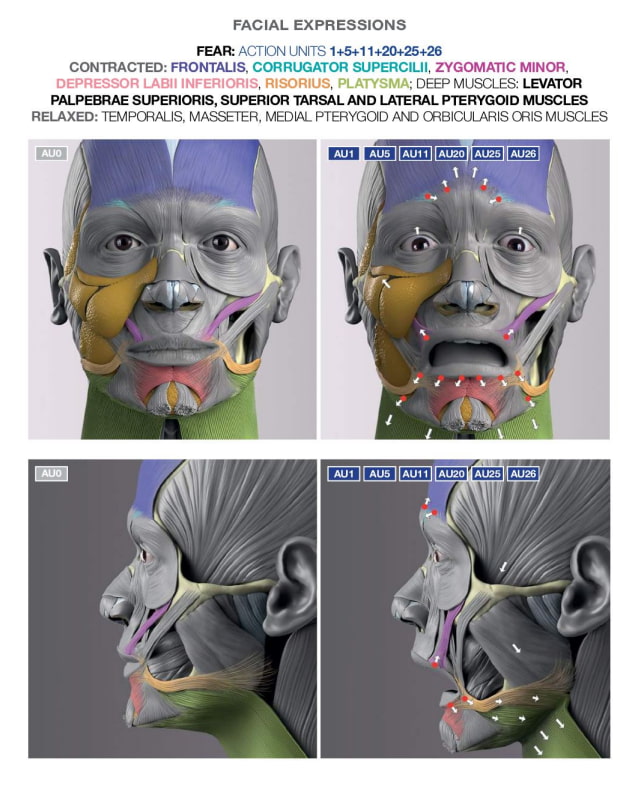

Our FACS-based emotion recognition system analyzes facial action units to detect and quantify seven core emotions: Joy, Sadness, Anger, Fear, Surprise, Disgust, and Contempt.
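
To illustrate how action units can be related to the seven emotions, the sketch below scores a set of active AUs against commonly cited prototype AU combinations from the FACS literature. These prototypes are simplified (intensity letters and left/right laterality are dropped), sources differ on the details, and they are not necessarily the mappings this system uses. `PROTOTYPES` and `score_emotions` are hypothetical names.

```python
# Commonly cited prototype AU combinations (simplified; an assumption,
# not this system's internal mapping).
PROTOTYPES = {
    "Joy": frozenset({6, 12}),
    "Sadness": frozenset({1, 4, 15}),
    "Surprise": frozenset({1, 2, 5, 26}),
    "Fear": frozenset({1, 2, 4, 5, 7, 20, 26}),
    "Anger": frozenset({4, 5, 7, 23}),
    "Disgust": frozenset({9, 15, 17}),
    "Contempt": frozenset({12, 14}),  # unilateral in the literature
}


def score_emotions(active_aus):
    """Score each emotion by Jaccard overlap between active and prototype AUs."""
    active = set(active_aus)
    scores = {}
    for emotion, prototype in PROTOTYPES.items():
        overlap = len(active & prototype)
        union = len(active | prototype)  # never zero: prototypes are non-empty
        scores[emotion] = overlap / union
    return scores


scores = score_emotions({6, 12})   # cheek raiser + lip corner puller
best = max(scores, key=scores.get)
print(best, round(scores[best], 2))
```

Jaccard overlap is used instead of plain prototype coverage so that a small prototype contained in a larger one (Surprise within Fear) does not tie with it when all of the larger set is active.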

Emotion Mappings

Research-Based Development

The FACS Analysis System is grounded in extensive academic research and clinical validation studies, reflecting its reliability and adaptability across diverse applications.

Foundational Research

The system builds upon the Facial Action Coding System (FACS) developed by Paul Ekman and Wallace V. Friesen. This standardized system for describing facial movements has been extensively validated through decades of psychological research and serves as the foundation for modern automated facial expression analysis. Recent advancements have demonstrated its utility in predicting emotional states such as depression, anxiety, and stress through machine learning applications.

- Ekman, P., & Friesen, W. V. (1978). Facial Action Coding System. Consulting Psychologists Press.

- Gavrilescu, M., & Vizireanu, N. (2019). Predicting Depression, Anxiety, and Stress Levels from Videos Using the Facial Action Coding System. Sensors.

Clinical Applications

Integration of FACS analysis in clinical settings has demonstrated significant value in psychological assessment and mental health monitoring. Recent studies highlight its effectiveness in identifying subtle facial expression patterns correlated with psychological states, enabling objective evaluations in areas such as depression, anxiety, and stress. Additionally, its non-invasive nature enhances its adaptability in therapeutic and diagnostic settings.

- Cohn, J. F., et al. (2007). Observer-based measurement of facial expression with the Facial Action Coding System. Oxford University Press.

Application Areas

Explore the various applications and use cases of our analysis system.

Applications

Clinical Psychology

Support mental health assessment and monitoring

Research

Facilitate emotion and behavior studies

Mental Health Monitoring

Continuous assessment of stress and anxiety levels

Corporate Wellness

Enhance employee well-being through emotion tracking

Education and Training

Improve learning experiences by understanding student emotions

Security and Surveillance

Detect suspicious behavior through facial expression analysis

Human-Computer Interaction

Enhance user experiences by adapting to emotional states